[Field report] Data Science Summer School at Ecole Polytechnique (with Bengio, Russell, Bousquet, Archambeau and others)

A short field report, with a personal viewpoint, on the Data Science Summer School (Ecole Polytechnique), Monday, Aug. 28 – Friday, Sept. 1, 2017.

Some event stats (copied from the event website):

- 400 participants

- 220 students (MSc, PhD) & postdocs, 100 professionals

- 16 experts (speakers, guests)

- 30 countries

- 6 continents

- 200 institutions

- 50 companies

- 6 sponsors

- 120 posters

- female : male ratio = 3 : 10

There was a rich program with several prominent experts in deep learning, optimization and reinforcement learning from both industry (Google, Amazon, Microsoft, Facebook) and academia (University of Montreal, Carnegie Mellon University, University of Edinburgh/KAUST, etc.), cf. the detailed program containing links to slides and websites and the speaker/guest list.

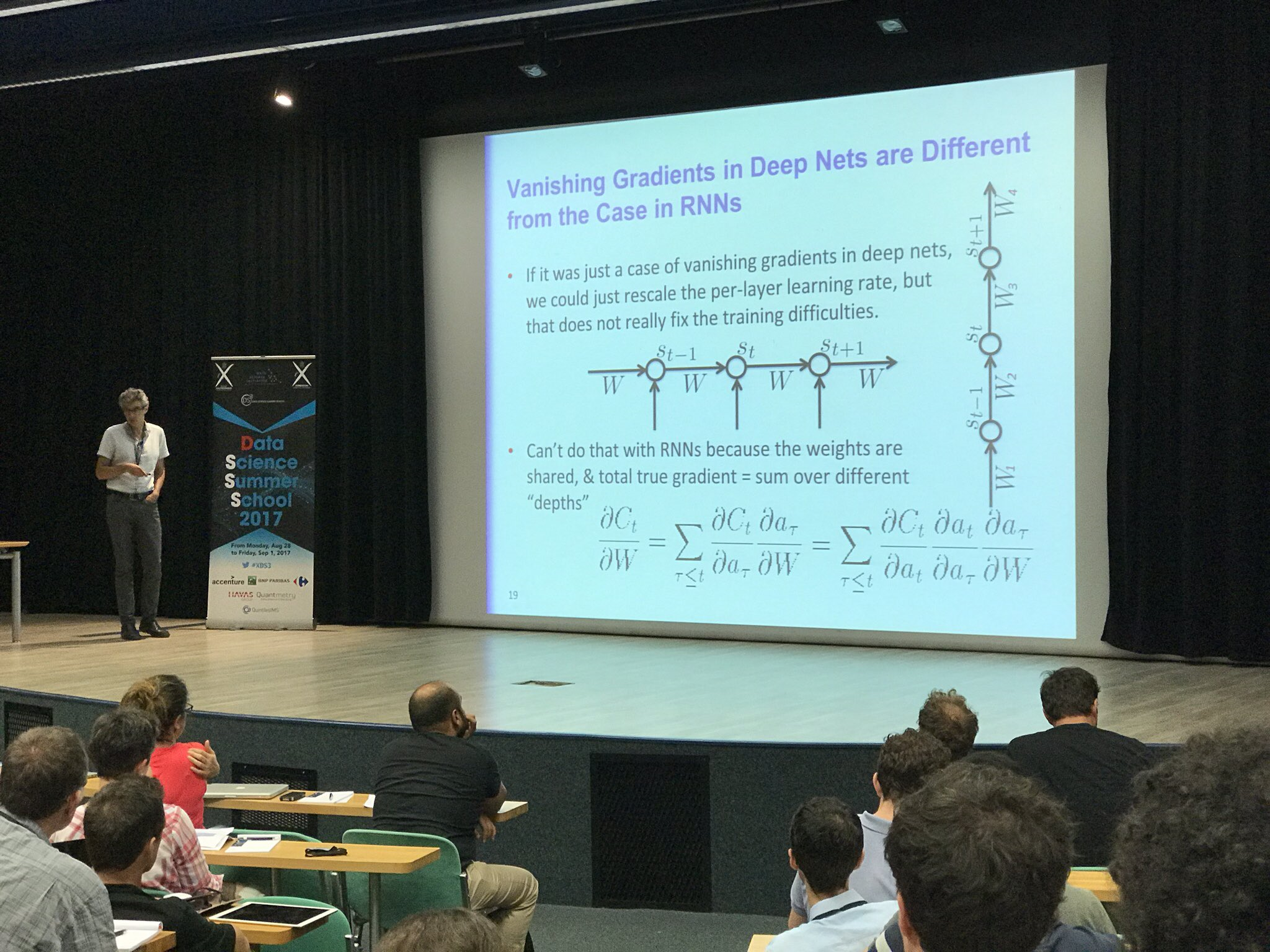

After introductory talks by the president of Ecole Polytechnique (Jacques Biot) and the main organizer of the summer school (Emmanuel Bacry), Yoshua Bengio (one of the fathers of modern deep learning, with Yann LeCun and Geoffrey Hinton) gave a first introductory talk entitled Deep Learning for AI, followed by a series of other talks (Backpropagation, Deep Feedforward NN, Tricks and Tips, RNN and NLP). His talks were fairly general and covered the state of the art in good practices (tricks and tips), what can currently be done with deep neural networks, what is hot in research, and the next frontier.

Yoshua Bengio talks takeaways:

- idea of compositionality

- distributed representations / embeddings

- hierarchy of features

- human-level abilities in vision and speech recognition

- NLP is improving, but much is left to be done

- five key ingredients for ML towards AI:

  - lots of data,

  - very flexible models,

  - enough computing power,

  - computationally efficient inference,

  - powerful priors

- new stuff in Deep Learning:

  - unsupervised generative neural nets, e.g. GANs

  - attention mechanism using GATING units, e.g. for Neural Machine Translation

  - memory-augmented networks

  - curriculum learning

  - 0-shot learning using maps between representations

  - using different modalities (image, text, sound)

  - multi-task learning (classification, regression, image to text, image to sound)

- for sequence data, to handle very long-term dependencies with RNNs, use multiple time scales

- disentangling the underlying factors of representations, concretely deep learning yields a linearized space of the original data so that you can do linear algebra on your images, words, etc.

- to the rather important but difficult question “How much data is needed?” Yoshua gave a rather simple but disappointing answer: “plot the empirical curve ‘accuracy vs. sample size’; collect data as long as it improves accuracy”.
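
The "accuracy vs. sample size" advice can be tried on a toy problem. The sketch below is only an illustration under stated assumptions: the data (two 1D Gaussian classes), the nearest-centroid classifier, the sample sizes and the `min_gain` threshold are all made up for the example, and none of it comes from the talk.

```python
import random

def make_data(n, rng):
    """Draw n labelled points: class 0 ~ N(-1, 1), class 1 ~ N(+1, 1) (toy data)."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        x = rng.gauss(2 * label - 1, 1.0)
        data.append((x, label))
    return data

def fit_threshold(train):
    """1D nearest-centroid classifier: threshold at the midpoint of the class means."""
    xs0 = [x for x, y in train if y == 0]
    xs1 = [x for x, y in train if y == 1]
    if not xs0 or not xs1:
        return 0.0  # arbitrary fallback if one class is missing from the sample
    return (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2

def accuracy(threshold, test):
    """Fraction of test points classified correctly (predict class 1 if x > threshold)."""
    hits = sum((x > threshold) == (y == 1) for x, y in test)
    return hits / len(test)

def learning_curve(sizes, seed=0, n_test=2000):
    """Empirical accuracy on a fixed test set for growing training sets."""
    rng = random.Random(seed)
    test = make_data(n_test, rng)
    pool = make_data(max(sizes), rng)
    return [(n, accuracy(fit_threshold(pool[:n]), test)) for n in sizes]

def keep_collecting(curve, min_gain=0.005):
    """Hypothetical stopping rule: continue while the last step gained at least min_gain."""
    if len(curve) < 2:
        return True
    return curve[-1][1] - curve[-2][1] >= min_gain

curve = learning_curve([10, 30, 100, 300, 1000])
for n, acc in curve:
    print(f"n={n:5d}  accuracy={acc:.3f}")
print("collect more data?", keep_collecting(curve))
```

In practice the curve is noisy, so averaging over several random splits before applying any stopping rule is probably wiser than this single run.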

Then came a talk on Reinforcement Learning by Sean Meyn, entitled Hidden Theory and New Super-Fast Algorithms, with a strong stochastic-approximation perspective. It was a very technical talk: I did not understand much, and I am fairly confident that most of the audience did not either.

Sean Meyn talks takeaways:

- for reinforcement learners, learn stochastic approximation rather than rediscovering particular cases
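
To make "stochastic approximation" concrete, here is a minimal sketch of the classical Robbins-Monro iteration, which looks for a root of a function that can only be evaluated with noise. The toy problem (estimating a mean), the step sizes 1/(n+1) and all names are assumptions chosen for the example, not material from the talk. The temporal-difference update V(s) ← V(s) + α (target − V(s)) has the same shape.

```python
import random

def robbins_monro(noisy_f, theta0, n_steps, step=lambda n: 1.0 / (n + 1)):
    """Seek a root of f(theta) = E[noisy_f(theta)] using noisy evaluations only."""
    theta = theta0
    for n in range(n_steps):
        theta = theta - step(n) * noisy_f(theta)
    return theta

# Toy problem: the root of f(theta) = E[theta - X] is the mean of X.
rng = random.Random(0)
samples = [rng.gauss(5.0, 2.0) for _ in range(10_000)]
stream = iter(samples)

estimate = robbins_monro(lambda theta: theta - next(stream),
                         theta0=0.0, n_steps=len(samples))
print(estimate, sum(samples) / len(samples))
```

With the step size 1/(n+1), the iterate is exactly the running average of the samples seen so far, which is why the two printed numbers agree up to rounding.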

Monday afternoon was focused on data science for smart grids and energy markets. I do not know much about this domain yet, so I did not enjoy it as much as I would have liked to. The round table included prominent speakers such as the director of EDF R&D.

Pradeep Ravikumar presented a course on probabilistic graphical models (Representation, Inference, Learning). It was very similar to the one I followed in the MVA master's program with Francis Bach and Guillaume Obozinski (both former PhD students of Michael Jordan). If I find some time, I will try to implement and fit a PGM-based yield curve model.

Pradeep Ravikumar talks takeaways:

- PGMs are a convenient way to store distributions and perform inference

- Python Library for Probabilistic Graphical Models

- Short Tutorial to Probabilistic Graphical Models (PGM) and pgmpy
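
As an illustration of "store a distribution, then perform inference", here is a small sketch of exact inference by enumeration on the textbook rain / sprinkler / wet grass network. It deliberately uses plain Python rather than pgmpy, so that no library API has to be assumed; the probability tables are the usual illustrative numbers, not something from the course.

```python
from itertools import product

# Illustrative conditional probability tables for the graph
# Rain -> Sprinkler, (Sprinkler, Rain) -> WetGrass.
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER_GIVEN_RAIN = {True: 0.01, False: 0.4}   # P(sprinkler=True | rain)
P_WET_GIVEN = {(True, True): 0.99, (True, False): 0.9,   # key: (sprinkler, rain)
               (False, True): 0.8, (False, False): 0.0}

def joint(rain, sprinkler, wet):
    """Joint probability, factorised along the edges of the graph."""
    p_s = P_SPRINKLER_GIVEN_RAIN[rain]
    p_w = P_WET_GIVEN[(sprinkler, rain)]
    return (P_RAIN[rain]
            * (p_s if sprinkler else 1 - p_s)
            * (p_w if wet else 1 - p_w))

def posterior_rain_given_wet():
    """P(rain=True | wet=True), summing the joint over the hidden sprinkler variable."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True)
                   for r, s in product((True, False), repeat=2))
    return numerator / evidence

print(round(posterior_rain_given_wet(), 4))  # 0.3577
```

Enumeration is exponential in the number of variables; libraries such as pgmpy exist precisely to exploit the graph structure instead.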

Peter Richtarik gave a course on Randomized Optimization Methods.

Peter Richtarik talks takeaways:

- 8 tools in the standard algorithmic toolbox in optimization:

  - gradient descent

  - acceleration

  - proximal trick

  - randomized decomposition (Stochastic Gradient Descent (SGD) / Randomized Coordinate Descent (RCD))

  - minibatching

  - variance reduction

  - importance sampling

  - duality
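
Two of these tools are easy to sketch in a few lines: minibatch SGD (randomized decomposition plus minibatching) and the proximal operator of an L1 penalty (the proximal trick). The noise-free toy regression problem, the step size and the function names below are assumptions made for the illustration; this is not code from the course.

```python
import random

def minibatch_sgd(xs, ys, batch_size=8, step=0.5, n_steps=300, seed=0):
    """Fit y ~ w * x by minimising the mean of 0.5 * (w * x_i - y_i)**2."""
    rng = random.Random(seed)
    indices = range(len(xs))
    w = 0.0
    for _ in range(n_steps):
        # Randomized decomposition: use a random subset of the terms of the sum.
        batch = rng.sample(indices, batch_size)
        grad = sum(xs[i] * (w * xs[i] - ys[i]) for i in batch) / batch_size
        w -= step * grad
    return w

def soft_threshold(v, lam):
    """Proximal operator of lam * |v|: shrink v towards zero by lam."""
    if v > lam:
        return v - lam
    if v < -lam:
        return v + lam
    return 0.0

# Noise-free toy data y = 3 * x, so every minibatch gradient vanishes at w = 3.
xs = [i / 10 for i in range(-10, 11)]
ys = [3.0 * x for x in xs]
w_hat = minibatch_sgd(xs, ys)
print(w_hat)
print(soft_threshold(3.0, 1.0), soft_threshold(-0.5, 1.0))
```

On noisy data a constant step size would leave SGD hovering around the optimum, which is where decreasing steps or variance reduction come in.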

Stuart Russell (as in the famous ‘Russell and Norvig’ AI book) presented his BLOG approach. He noted that statistics had long ignored syntax, which is central in (theoretical) computer science and logic. These two fields (CS, logic) had long been the major approach to AI (cf. IJCAI and AAAI proceedings) and were highlighted as such in the first editions of his book (e.g., graph traversals, Prolog). His idea is to couple statistics and logic. Concretely: introduce statistics into a Prolog-like language, or equivalently introduce first-order logic syntax into a PGM-like language.

Stuart Russell talks takeaways:

- BLOG (the name of his stat/Prolog hybrid model) can be used for extraction of facts / completely unsupervised text understanding

- BLOG is used currently at the UN to distinguish between earthquakes and underground nuclear tests.

Csaba Szepesvari presented a very pedagogical course on bandits (part 1, part 2, part 3). The course was accompanied by great materials.

Csaba Szepesvari talks takeaways:

- his website banditalgs.com to play with bandits
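
For readers who want to play with bandits right away, here is a minimal sketch of the classical UCB1 strategy on Bernoulli arms. The arm means, the horizon and the function name are hypothetical choices for the example and are not taken from the course materials.

```python
import math
import random

def ucb1(arm_means, n_rounds, seed=0):
    """Play Bernoulli arms with UCB1 and return the number of pulls per arm."""
    rng = random.Random(seed)
    k = len(arm_means)
    counts = [0] * k     # number of pulls of each arm
    sums = [0.0] * k     # total reward collected from each arm
    for t in range(1, n_rounds + 1):
        if t <= k:
            arm = t - 1  # pull every arm once to initialise the estimates
        else:
            # Optimism: empirical mean plus an exploration bonus.
            arm = max(range(k),
                      key=lambda i: sums[i] / counts[i]
                      + math.sqrt(2 * math.log(t) / counts[i]))
        reward = 1.0 if rng.random() < arm_means[arm] else 0.0
        counts[arm] += 1
        sums[arm] += reward
    return counts

counts = ucb1([0.1, 0.9], n_rounds=2000)
print(counts)
```

The bonus shrinks as an arm is pulled more often, so the clearly worse arm ends up being tried only occasionally.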

Olivier Bousquet, Head of Machine Learning Research at Google, presented impressive advances in Deep Learning, especially in the ‘learn 2 learn’ approach, cf. talk 1, talk 2.

Olivier Bousquet talks takeaways:

- todo: watch WarGames

- learn 2 learn

  - leverage a network to build another one, using reinforcement learning feedback from the loss of the network being built

- AutoML: learn automatically

  - the architecture

  - the kind of gradient descent

  - the loss function

- TensorFlow Research Cloud

  - the TPUs (tensor processing units): custom chips specialized for matrix multiplication, which Google has made available in the cloud for Deep Learning research

Then came a round table on the future of AI. It was interesting, but I did not get many new ideas from it. Stuart Russell gave the impression that he was eager to discuss things in more depth, but the round table format was too short. Many of the topics mentioned are dealt with in depth in government, parliamentary and academic documents (cf. the One Hundred Year Study on Artificial Intelligence (AI100), or for French speakers, the rather exhaustive French Senate report on AI pointing towards the US/EU/UK ones).

To conclude the day, a nice banquet, with pastries by Chef Philippe Conticini.

Sebastian Nowozin, from Microsoft Research, talked about unsupervised Deep Learning.

Sebastian Nowozin talks takeaways:

- work on the theory of GANs is ongoing

- f-divergences (Kullback-Leibler is a particular case) are useful to obtain principled GANs
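
As a reminder of what an f-divergence is, the sketch below computes D_f(P || Q) = Σ q(x) f(p(x) / q(x)) for discrete distributions, where f is convex with f(1) = 0. Choosing f(u) = u log u recovers Kullback-Leibler, and f(u) = |u − 1| / 2 gives the total variation distance. The function names and the two toy distributions are assumptions for the example; the GAN training side is not shown.

```python
import math

def f_divergence(p, q, f):
    """D_f(P || Q) = sum_x q(x) * f(p(x) / q(x)) for discrete P, Q with q(x) > 0."""
    return sum(qx * f(px / qx) for px, qx in zip(p, q))

def kl_generator(u):
    """f(u) = u log u, with the convention 0 log 0 = 0."""
    return u * math.log(u) if u > 0 else 0.0

def tv_generator(u):
    """f(u) = |u - 1| / 2, which yields the total variation distance."""
    return 0.5 * abs(u - 1.0)

p = [0.5, 0.5]
q = [0.25, 0.75]
kl = f_divergence(p, q, kl_generator)
tv = f_divergence(p, q, tv_generator)
print(kl, tv)
```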

Cédric Archambeau gave two talks:

- one that showcased ML Research at Amazon,

- another one on Bayesian Optimisation for finding good hyperparameters (e.g. for Deep Learning) with Gaussian processes underneath.

Cédric Archambeau talks takeaways:

- mentioned great material from Amazon

- Amazon AI blog

- a neural machine translation open source tool: sockeye, which can be used to perform any kind of ‘sequence to sequence with attention’ learning

- DeepAR: Probabilistic Forecasting with Autoregressive Recurrent Networks, a recent paper that matches my personal interest in autoregressive time series; I should try to compare it to our approach, Autoregressive Convolutional Neural Networks for Asynchronous Time Series. Since DeepAR is a generative model, we can build confidence intervals by sampling multiple paths from the model and measuring appropriate statistics

- Archambeau mentioned techniques to do ‘data augmentation’ on time series by drawing different windows of varying positions and lengths; I have not looked at the details yet, but it reminds me of the stationary block bootstrap approach (1994).

- at Amazon, they prefer to use the MXNet deep learning framework

- they have recently teamed up with people from GPyOpt (nonparametric Bayesian methods, Gaussian processes)
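
Two of the ideas above can be sketched without any deep learning: drawing random windows from a series, and turning sampled paths of a generative model into an interval. The code below is a stand-in only: it uses a toy AR(1) model instead of DeepAR, and the window rule, parameters and function names are my own assumptions, not the techniques as presented in the talk.

```python
import random

def random_windows(series, n_windows, min_len, max_len, seed=0):
    """Draw contiguous windows of random position and length (needs max_len <= len(series))."""
    rng = random.Random(seed)
    windows = []
    for _ in range(n_windows):
        length = rng.randint(min_len, max_len)
        start = rng.randint(0, len(series) - length)
        windows.append(series[start:start + length])
    return windows

def sample_paths(last_value, horizon, n_paths, phi=0.8, sigma=1.0, seed=0):
    """Simulate future paths from a toy AR(1) model x_t = phi * x_{t-1} + noise."""
    rng = random.Random(seed)
    paths = []
    for _ in range(n_paths):
        x, path = last_value, []
        for _ in range(horizon):
            x = phi * x + rng.gauss(0.0, sigma)
            path.append(x)
        paths.append(path)
    return paths

def interval(paths, step, lower=0.05, upper=0.95):
    """Empirical quantiles of the simulated values at a given forecast step."""
    values = sorted(path[step] for path in paths)
    lo = values[int(lower * (len(values) - 1))]
    hi = values[int(upper * (len(values) - 1))]
    return lo, hi

series = [float(t % 7) for t in range(100)]
windows = random_windows(series, n_windows=5, min_len=10, max_len=30)
paths = sample_paths(last_value=series[-1], horizon=10, n_paths=500)
print([len(w) for w in windows])
print(interval(paths, step=9))
```

Any generative forecaster that can simulate paths could replace `sample_paths` here; the quantile step stays the same.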

Finally, Laura Grigori presented her work on linear algebra algorithms that minimize communication, which is the real bottleneck in highly distributed architectures, e.g. several GPUs. At the moment, the speed-up is sublinear in the number of GPUs because of the communication the algorithms require.

Laura Grigori talks takeaways:

- thanks to these guys, we may get even more computing power :)
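
A back-of-the-envelope cost model shows why communication makes the speed-up sublinear. Everything in this sketch is hypothetical: the linear communication term, the constants and the function names are assumptions made for illustration, not a model from the talk.

```python
def run_time(p, compute=100.0, comm_per_worker=0.5):
    """Toy cost model: perfectly parallel compute plus communication growing with p."""
    communication = comm_per_worker * (p - 1)  # hypothetical linear communication cost
    return compute / p + communication

def speedup(p, **kwargs):
    """Speed-up of p workers relative to a single worker under the toy model."""
    return run_time(1, **kwargs) / run_time(p, **kwargs)

for p in (1, 2, 4, 8, 16):
    print(p, round(speedup(p), 2))
```

Under this model, lowering `comm_per_worker` moves the curve closer to the ideal speed-up of p, which is the point of communication-avoiding algorithms.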

A few other noteworthy things:

- lots of posters at the poster sessions

- the Ecole Polytechnique Data Science Initiative and its burgeoning GitHub

- the tick library for Hawkes and other point processes

- met with friends :)