[HKML] Hong Kong Machine Learning Meetup Season 2 Episode 8

When?

- Wednesday, July 15, 2020 from 7:00 PM to 9:00 PM

Where?

- At home, on Zoom. All meetups will be held online until the COVID-19 crisis is over.

Thanks to our patrons for supporting the meetup!

Check the Patreon page to join the current list:

Programme:

Scott Fullman, ML Lead @ Ascent - Snorkel: Weak Supervision for NLP Tasks

Snorkel is a framework for weak supervision. This framework enhances productivity in custom Natural Language Processing tasks by enabling fast labeling at scale.

Snorkel helps when

- there are no training labels readily available,

- there are no suitable pre-trained models,

- only expensive experts could label the data correctly, often at a prohibitive cost.

This frequently happens for domain-specific NLP tasks that rely on complex jargon, for example legalese in Scott’s use case, or sentiment and information extraction in finance.
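To make the idea of weak supervision concrete, here is a minimal sketch in plain Python. It does not use the Snorkel API: the labeling functions, keywords and the `majority_vote` helper are all hypothetical, and a simple majority vote stands in for the label model that a framework such as Snorkel would learn from the votes.

```python
from collections import Counter

# Label values: each labeling function may abstain instead of guessing.
ABSTAIN, NEGATIVE, POSITIVE = -1, 0, 1


# Hypothetical labeling functions: cheap keyword heuristics written by hand.
def lf_spreads_widened(text):
    return NEGATIVE if "spreads widened" in text.lower() else ABSTAIN


def lf_default(text):
    return NEGATIVE if "default" in text.lower() else ABSTAIN


def lf_spreads_tightened(text):
    return POSITIVE if "spreads tightened" in text.lower() else ABSTAIN


def lf_upgrade(text):
    return POSITIVE if "upgrade" in text.lower() else ABSTAIN


LABELING_FUNCTIONS = [lf_spreads_widened, lf_default, lf_spreads_tightened, lf_upgrade]


def majority_vote(text):
    """Combine noisy votes; abstain when no function fires or on a tie."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


docs = [
    "CDS spreads widened after the default",
    "Spreads tightened following the rating upgrade",
    "The index was flat",
]
weak_labels = [majority_vote(doc) for doc in docs]
```

The resulting `weak_labels` can then be used to train a downstream classifier on the documents where at least one heuristic fired.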

Scott’s slides describe how to use the Snorkel framework, and point to a few links and papers for readers who want to go deeper.

Note that I also experimented with Snorkel some time ago to build a sentiment model on portfolio managers’ blog posts, which are heavy in credit derivatives jargon.

I detailed this attempt in the following posts:

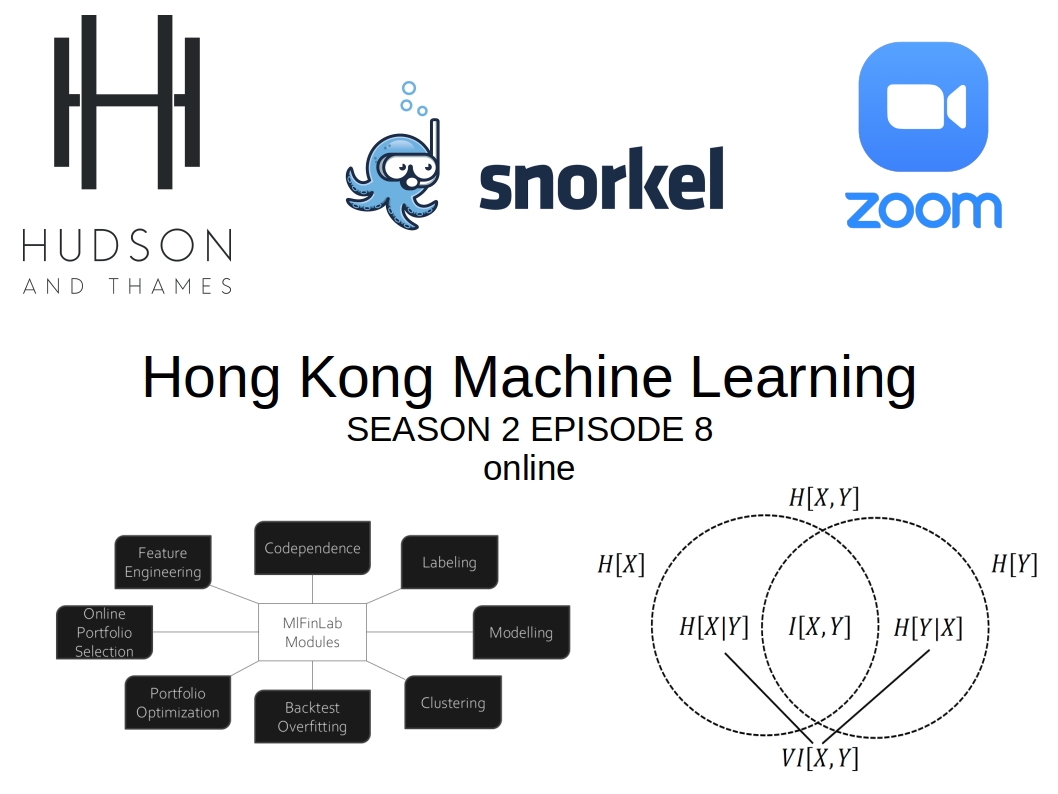

Illya Barziy - Codependence with MlFinLab

Illya will present the MlFinLab package, which implements Machine Learning tools for Finance, largely based on the work of Marcos Lopez de Prado. Amongst the many modules (feature engineering, labeling, portfolio optimization, backtest overfitting, …), Illya will present one in particular: Codependence.

Website: https://hudsonthames.org/

GitHub: https://github.com/hudson-and-thames

Some personal thoughts, based on my PhD and ongoing research:

- Slide 8/23, “Correlation is typically meaningless unless the two variables follow a bivariate Normal distribution.”

I don’t fully agree with this statement. Rank correlation coefficients such as Spearman’s rho are well suited to elliptical copulas (a bivariate Normal distribution is a particular case of an elliptical distribution). Copulas between stock returns are typically elliptical, i.e. there are no fancy non-linear patterns to detect, only some outliers that make Pearson’s correlation harder to estimate, and possibly not meaningful at all. So, if the goal is to measure comonotonicity, Spearman’s rho or Kendall’s tau are good enough for that purpose.
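To illustrate the outlier point, here is a small sketch on synthetic data. The setup is an assumption made for illustration (Gaussian samples with correlation 0.7 and one injected extreme observation, not actual stock returns), and `dependence_summary` is a hypothetical helper.

```python
import numpy as np
from scipy.stats import kendalltau, pearsonr, spearmanr

rng = np.random.default_rng(0)
rho = 0.7
x, y = rng.multivariate_normal([0.0, 0.0], [[1.0, rho], [rho, 1.0]], size=1000).T

# Contaminate the sample with a single extreme, discordant observation.
x_out, y_out = x.copy(), y.copy()
x_out[0], y_out[0] = 50.0, -50.0


def dependence_summary(a, b):
    """Return Pearson, Spearman and Kendall coefficients for two samples."""
    return {
        "pearson": float(pearsonr(a, b)[0]),
        "spearman": float(spearmanr(a, b)[0]),
        "kendall": float(kendalltau(a, b)[0]),
    }


clean = dependence_summary(x, y)
contaminated = dependence_summary(x_out, y_out)

for name in clean:
    print(f"{name:>8}: clean={clean[name]:+.3f}  with outlier={contaminated[name]:+.3f}")
```

On this sample, the single outlier moves Pearson’s coefficient substantially, while the rank-based coefficients barely change.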

- Slide 10/23, “For instance, the difference between correlations (0.9,1.0) is the same as (0.1,0.2), even though the former involves a greater difference in terms of codependence.” – Lopez de Prado

For a more precise understanding of this remark, cf. my research on geometries for copulas:

- a blog post: How to define an intrinsic Mean of Correlation Matrices in a Riemannian sense?

- a short research note, Optimal Transport vs. Fisher-Rao Distance between Copulas for Clustering Multivariate Time Series, and associated slides

- Exploring and measuring non-linear correlations: Copulas, Lightspeed Transportation and Clustering, and a blog post with code: Measuring non-linear dependence with Optimal Transport

- Slide 12/23, mutual information can be tricky to estimate. Its most standard estimator is so imprecise, and so sensitive to outliers and tails, that it defeats its original purpose of robustly measuring dependence. However, there are better, though relatively unknown, alternative estimators that fix this problem. One such estimator is based on the relationship between mutual information and copula entropy: Mutual Information Is Copula Entropy.
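For reference, the identity behind that title can be written as follows, assuming continuous margins. The notation is chosen here for illustration: $c$ is the copula density of $(X, Y)$, $(U, V) = (F_X(X), F_Y(Y))$ are the variables mapped through their own cumulative distribution functions, and $H_c$ is the entropy of the copula density.

$$
I(X; Y) = \int_{[0,1]^2} c(u, v) \, \log c(u, v) \, du \, dv = -H_c(U, V)
$$

In the special case of a Gaussian copula with correlation $\rho$, this reduces to $I(X; Y) = -\frac{1}{2} \log(1 - \rho^2)$. Since the right-hand side only involves the copula, an estimator built this way does not depend on the margins.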

- Slide 13/23, GNPR, which is both a representation and a distance, aims at capturing more information than just the codependence between two variables. GNPR is based on copulas and Sklar’s theorem, which states that a multivariate joint distribution can be written as a copula applied to its marginal cumulative distribution functions, the copula being the joint distribution of the uniform variables in $[0, 1]$ obtained by applying to each variable its own cumulative distribution function. So, the whole information of the multivariate distribution can be decomposed into a copula and a tuple of margins. This decomposition is interesting for clustering variables (e.g. stocks based on their time series of returns) because it takes into account both their dependence (through the copula) and the marginal behaviour of the returns. In practice, GNPR helps to craft clusters of stocks which are both highly correlated and whose returns behave similarly. If two stocks are highly correlated, but one has returns that are roughly normally distributed whereas the other has jumpy, negatively skewed returns with fat tails, they probably should not be considered as belonging to the same cluster from a risk/investment perspective.
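Sklar’s decomposition, $F(x_1, \dots, x_d) = C(F_1(x_1), \dots, F_d(x_d))$, can be sketched empirically by replacing each margin with its empirical cumulative distribution function, i.e. by ranking. The code below is not the GNPR implementation, only an illustration of separating the copula from the margins; `pseudo_observations` is a hypothetical helper and the data are synthetic.

```python
import numpy as np
from scipy.stats import rankdata


def pseudo_observations(samples):
    """Map each column to (0, 1) through its empirical CDF (rank / (n + 1))."""
    samples = np.asarray(samples, dtype=float)
    n_obs = samples.shape[0]
    return rankdata(samples, axis=0) / (n_obs + 1)


rng = np.random.default_rng(42)
z = rng.multivariate_normal([0.0, 0.0], [[1.0, 0.8], [0.8, 1.0]], size=500)

# Same dependence structure, but the second margin is made skewed and
# heavy-tailed through a strictly increasing transform.
gaussian_margins = z
skewed_margin = np.column_stack([z[:, 0], np.exp(3.0 * z[:, 1])])

u_gaussian = pseudo_observations(gaussian_margins)
u_skewed = pseudo_observations(skewed_margin)

# The copula part is identical; only the margins differ.
copulas_match = np.allclose(u_gaussian, u_skewed)
```

A copula-only distance would treat these two pairs as identical, which is why a representation that also keeps the margins can separate them.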

- Slide 16/23, “In some situations due to numerical reasons the estimated covariance matrix cannot be inverted. Shrinkage is used to avoid this problem.”

Shrinkage is starting to get old. There are better techniques available now, for example using Random Matrix Theory (slide 18/23), or projecting an empirical correlation matrix (not PSD) onto its closest correlation matrix (PSD).
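As a rough sketch of the second idea, the code below repairs a non-PSD matrix by clipping its negative eigenvalues and rescaling to a unit diagonal. This is a simple approximation, not an exact nearest-correlation-matrix algorithm (which would need an iterative projection scheme); `clip_to_correlation` and the example matrix are hypothetical.

```python
import numpy as np


def clip_to_correlation(corr, eps=1e-8):
    """Clip eigenvalues below eps, then rescale so the diagonal is 1."""
    corr = np.asarray(corr, dtype=float)
    sym = (corr + corr.T) / 2.0
    eigvals, eigvecs = np.linalg.eigh(sym)
    clipped = np.clip(eigvals, eps, None)
    psd = (eigvecs * clipped) @ eigvecs.T
    scale = np.sqrt(np.diag(psd))
    fixed = psd / np.outer(scale, scale)
    np.fill_diagonal(fixed, 1.0)
    return fixed


# An inconsistent "correlation" matrix: A~B and B~C are strongly positive,
# yet A~C is strongly negative, so the matrix is not PSD.
bad = np.array([
    [1.0, 0.9, -0.9],
    [0.9, 1.0, 0.9],
    [-0.9, 0.9, 1.0],
])

fixed = clip_to_correlation(bad)
print("min eigenvalue before:", np.linalg.eigvalsh(bad).min())
print("min eigenvalue after: ", np.linalg.eigvalsh(fixed).min())
```

The rescaling step is a congruence transform, so it keeps the clipped matrix positive semi-definite while restoring ones on the diagonal.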

- Slide 20/23, the idea behind the Theory-Implied Correlation (TIC) is similar to the Hierarchical PCA from Marco Avellaneda, i.e. using a priori information (such as a GICS classification) to guide an algorithm working with noisy data.

Most of Marcos’ material can be found in his SSRN articles, and in his new book, which I recently read and commented on: Machine Learning for Asset Managers.