[ICML 2018] Day 1 - Tutorials

[ICML 2018] Day 1 - Tutorials

The first day of the ICML 2018 conference consisted in three tutorial sessions of three tutorials in parallel each (cf. the full list here).

These tutorials were:

- Imitation Learning

- Learning with Temporal Point Processes

-

Machine Learning in Automated Mechanism Design for Pricing and Auctions

- Toward Theoretical Understanding of Deep Learning

- Defining and Designing Fair Algorithms

-

Understanding your Neighbors: Practical Perspectives From Modern Analysis

- Variational Bayes and Beyond: Bayesian Inference for Big Data

- Machine Learning for Personalised Health

- Optimization Perspectives on Learning to Control

From these tutorials, I attended the following (and the reason why):

- Learning with Temporal Point Processes:

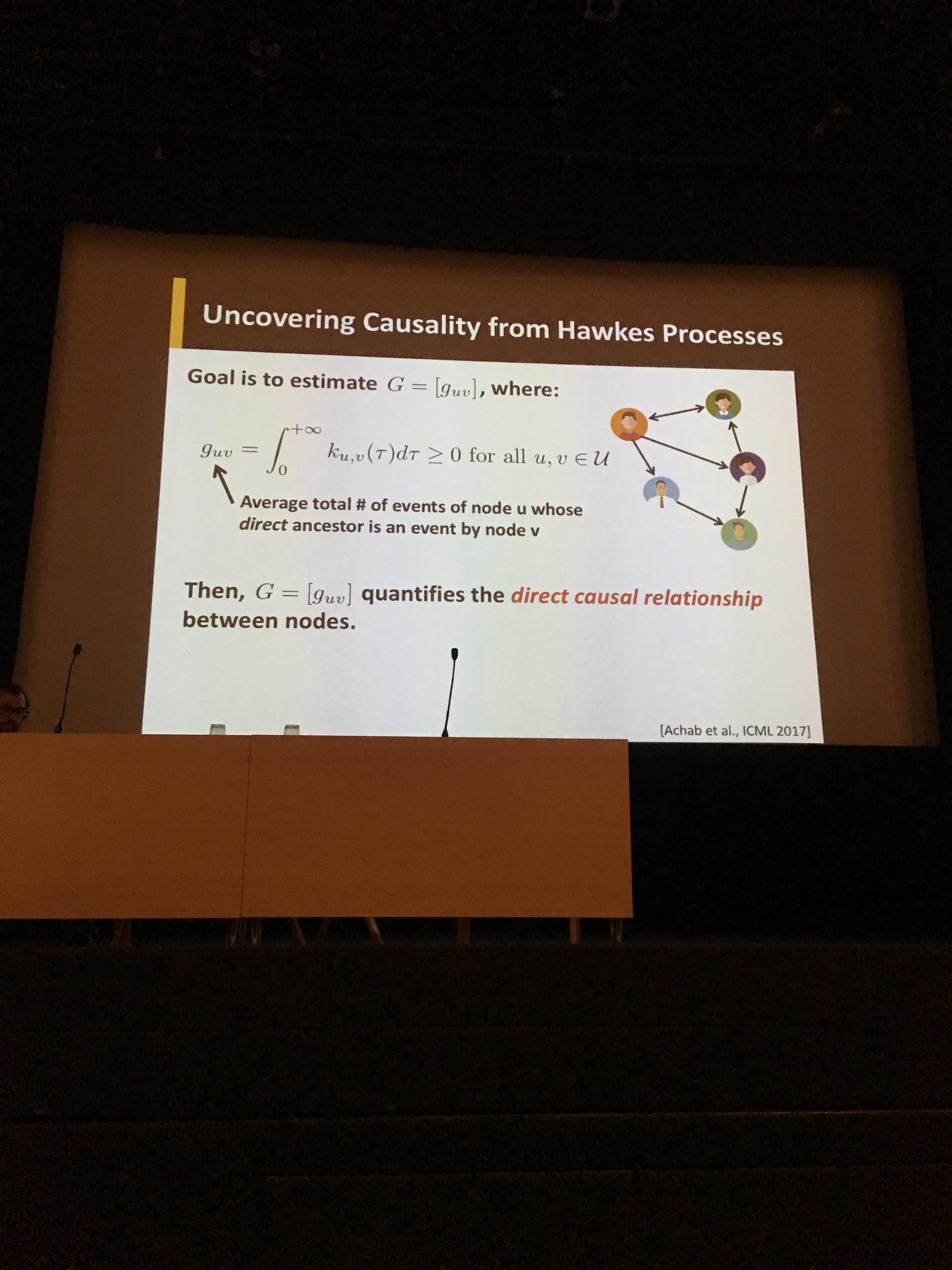

- Working in finance, I have obviously an interest in modelling time series from a stochastic point of view: Temporal Point Processes offer a convenient way to model time series with asynchronous / irregularly sampled observations. The approach commonly used consists in aggregating the observations over an interval. Such approach leads to several impractical questions such as how long should the interval be, or how to deal with empty intervals, are results robust to these arbitrary parameters? Many RNNs deep learning approaches suffer from this problem too. On the contrary temporal point processes are defined on continuous time. After reviewing a couple of applications as illustrations, e.g. time series of edits in Wikipedia pages, answers to a question in Quora, type and temporal clustering of questions asked on stackoverflow by a student during his college time), the speakers presented the building blocks of temporal point processes: the intensity function (to be understood as the rate of events, i.e. the number of events per unit of time), and basic processes such as the Poisson process (constant rate), the inhomogeneous Poisson process (the intensity function is a function of t, but independent of history), the terminal (or survival) process (the intensity function is some function of t up to some time t_s (a certain number of events have happened), and after this time t_s it takes only 0 value), and finally the self-exciting (Hawkes) process whose intensity is stochastic and history dependent (this process is convenient to model clustered or bursty occurrence of events). I have already palyed a bit with the latter, specifically to try to establish a competitive approach to our work on multivariate asynchronous autoregressive time series of quotes from the CDS market (cf. our ICML 2018 paper on the subject). Then, they show how to combine all these processes to model and infer from funky processes with applications in viral marketing, optimal repetition policy to learn a language, dealing with epidemics, etc. I may do a small project on the wikipedia edits of pages dealing with companies in a near future using the presented approaches, and probably blog about it. Maybe also try to model the bursts in trading CDS indices (thanks to OTCStreaming database of reported trades (free data, a bit more info here)).

- Toward Theoretical Understanding of Deep Learning

- This tutorial reviewed the recent development in the theory of deep learning. In short, no deep results yet. This is considered so hard that one effective approach to tackle this problem is to simplify the object of study (deep neural networks) by removing what are thought (but maybe wrongly!) their principal characteristics: depth and non-linearity. Some experiments showed that in fact depth is not that much about expressiveness of the model but more on easing the optimization of the loss function. To obtain theorems (or semi-theorems), studying deep neural networks with no non-lineary (f(x) = x) seems the most promising way so far. Otherwise, some common sense results such as the birthday paradox (you only need to have 23 persons in a room so that there is a probablity higher than 1/2 that at least two persons have their birthday the same day), yield results on the effective capacity of a network and its generalization (for example, you only need ~250,000 (« 7,000,000,000 people on earth) face images so that a GAN model can generate all possible faces). We still do not know why a 20 million parameter (VGG19) model trained on a relatively small dataset (CIFAR10 has 50,000 images) does not overfit. Some tentatives to obtain the real capacity (intrisic dimension of the network), but we are maybe still far from obtaining tight results or bounds, perhaps some insights in the process… In the linear case, high eigenvalues localize in a particular layer. The layers are robust to noise injection, but why? Because of the noisyness of the stochastic gradient descent? Something else? The speaker did not review the `physicist’ approach (e.g. the renormalization group idea). Finally, the speaker insists that pure theory in deep learning is not realistic and that the theoretic research should experiment a lot (more).

- Machine Learning for Personalised Health

- Besides being an interesting and very interesting topic, I went to this session for its similarity to the world of quant finance: you have ``small’’ datasets with noisy and scarce label, a very complex system where causality is not obvious to determine, alike in finance a bad decision incurs a huge cost (actually much more than in finance: loss of life and health vs. loss of money), the field is strongly regulated: you have to be able to explain your decisions and be accountable for them, you face practitioners who are not confident in these relatively new techniques and prefer some good story telling (even if backfitted), etc. I were expecting a few trick of the trades to deal with all these problems, but the tutorial was actually more of a high level overview of the challenges. What I keep from it in a nutshell: unsupervised and discriminative methods are nice to gain some basic knowledge or an intuitive on problems and data you do not know well, then you have to switch to probabilistic models that you can somewhat explain and tweak with human / expert priors. Probabilistic graphical models are great for that. Causality is the new black: A rising topic in recent years at conference such as ICML or NIPS + the Pearl paper Theoretical Impediments to Machine Learning With Seven Sparks from the Causal Revolution.

On a non-technical side note, lots of top tech companies were showing off at their booth and a couple of quant funds (cf. the page of sponsors). The game for many people today was to get invites to the ``secret parties’’ organized by these companies before they (rather quickly) get full.

My program for tomorrow (an attempt):

- Transfer and Multi-Task Learning + CoVeR: Learning Covariate-Specific Vector Representations with Tensor Decompositions

- Gaussian Processes

- Deep Learning

- Networks and Relational Learning